JSALT 2024 Plenary Lectures

Schedule of Speakers

All lectures will be live-streamed via CLSP’s YouTube channel.

-

Speaker: Lale Akarun, Boğaziçi University

Location: Hackerman B17

Title: 3D Human Body Modeling

Abstract: Understanding the actions of humans is important for a range of applications: surveillance, human-computer or human-robot interaction, and sign language understanding. Even before the availability of 3D sensors, analysis-by-synthesis approaches used 3D human body models. With the availability of 3D sensors, inferring and using 3D models of the human body became the standard. In this talk, I will start from the basics of 3D vision: acquisition, representation, and inferring 3D from monocular images. Then I will move on to 3D human body models and recent work on human pose and shape estimation. I will introduce work on human motion modeling and conclude with how these use LLMs for human motion synthesis.

Bio: Lale Akarun is a professor of Computer Engineering at Boğaziçi University, Istanbul. She received her PhD degree in Electrical Engineering from the Polytechnic School of Engineering of NYU in 1992. She served as Vice President for Research at Boğaziçi from 2012 to 2016. Since 2018, she has been the vice president of the International Association for Pattern Recognition. Her research areas are in image processing and computer vision, and in particular, processing of faces and gestures. She has supervised over 70 graduate theses and published more than 200 scholarly papers in scientific journals and refereed conferences. She has conducted research projects in biometrics, face recognition, hand gesture recognition, human-computer interaction, and sign language recognition.

-

Speaker: Megan Ansdell, National Aeronautics and Space Administration (NASA)

Location: Hackerman B17

Title: Enabling Space Science with Large Language Models

Abstract: This talk will cover the development and application of Large Language Models (LLMs) to the space sciences, in particular through the activities of the NASA Science Mission Directorate (SMD). This will include an overview of the recently developed NASA SMD LLM, descriptions of its current and potential future applications for enabling scientific discovery and space-based missions, and discussion of the key challenges for both researchers and funding agencies going forward.

Bio: Megan Ansdell is a Program Officer at NASA HQ in Washington, DC, where she serves as the AI/ML Lead for Planetary Science as well as the Program Scientist for the Habitable Worlds Observatory and the Planetary Protection Lead for the Mars Sample Return Campaign. Before joining NASA, Megan was an ML Research Fellow at the Flatiron Institute in New York City, where she held a joint position with the Center for Computational Astrophysics and Center for Computational Mathematics, and was also previously a Postdoctoral Fellow at UC Berkeley’s Center for Integrative Planetary Sciences. Megan obtained her PhD in Astrophysics from the University of Hawaii in 2017 with a thesis on demographic studies of protoplanetary disks. Before her PhD, Megan earned a Master’s degree in International Science and Technology Policy from the George Washington University, where she researched and advocated for international cooperation in human space exploration. Megan has also obtained a Master’s degree in Space Studies from the International Space University in France and a Bachelor’s degree in Astrophysics from the University of St. Andrews in Scotland.

-

Speaker: Gordon Wichern, Mitsubishi Electric Research Laboratories (MERL)

Location: Hackerman B17

Title: Modeling Hierarchies of Sound for Audio Source Separation and Generation

Abstract: A sound signal carries information at multiple levels of granularity. For example, music contains different instrument signals, each composed of individual notes, where each note is composed of individual frequency components. As black-box, data-driven approaches have come to dominate nearly all aspects of audio signal processing, incorporating prior knowledge of these hierarchical relationships is critical to obtain explainable and controllable models. In the first part of this talk, I will discuss our work on audio source separation, where we hierarchically extract the different components that compose a complex sound scene, and detail how these approaches enable novel controls for interacting with source separation models. I will then shift to a discussion on audio generative models, where we demonstrate the necessity of using features at multiple levels of granularity to detect and quantify training data memorization in a large text-to-audio diffusion model. Finally, I will discuss how we use classifier probes to understand what a large music transformer knows about music, and describe approaches for using the probes to build fine-grained interpretable controls.

Bio: Gordon Wichern is a Senior Principal Research Scientist at Mitsubishi Electric Research Laboratories (MERL) in Cambridge, Massachusetts. He received his B.Sc. and M.Sc. degrees from Colorado State University and his Ph.D. from Arizona State University. Prior to joining MERL, he was a member of the research team at iZotope, where he focused on applying novel signal processing and machine learning techniques to music and post-production software, and before that a member of the Technical Staff at MIT Lincoln Laboratory. He is the Chair of the AES Technical Committee on Machine Learning and Artificial Intelligence (TC-MLAI), and a member of the IEEE Audio and Acoustic Signal Processing Technical Committee (AASP-TC). His research interests span the audio signal processing and machine learning fields, with a recent focus on source separation and sound event detection.

-

Speaker: Hanseok Ko, School of Electrical Engineering, Korea University

Location: Hackerman B17

Title: An Overview and Challenges of Interactive HMI for Realizing Human-Like Interface

Abstract: Humans have always desired to interact with intelligent machines in a way that lets them become assistants and sometimes companions. The interactive human-machine interface (HMI) has drawn significant attention due to recent advances in LLMs and generative AI, which have a substantial positive impact on the realization of human-like interactive machines. In this talk, I will give an overview of the HMI, its history, and how recent advancements in transformers have played a pivotal role in its development. Then, I will review a pipeline of the various HMI interaction-enabling components, such as perception, understanding, and response. Afterwards, I will talk about the work on TTS to highlight some of the recent research efforts at the Lab. Finally, I will summarize the challenges of HMI technology to identify some research prospects.

Bio: Hanseok Ko is a Professor of ECE at Korea University, Seoul, and has been credited as the leading developer of the core acoustics and speech interface for Hyundai/Kia Motors. Since joining the faculty of Korea University, Dr. Ko has been serving as the Director of the Intelligent Signal Processing Lab, sponsored by industries and national grants to address and engage research in AI/ML, multimodal signal processing (acoustics, speech, and image processing), and man-machine interface for robotics, consumer electronics, and healthcare applications. Dr. Ko served as General Chair of the IEEE ICASSP 2024 and General Chair of the Interspeech 2022. Dr. Ko co-founded two successful startups: Mediazen, which became successfully listed on KOSDAQ, and BenAI, which has been listed on NASDAQ since March 2024.

-

Speaker: Debra JH Mathews, Johns Hopkins University

Location: Hackerman B17

Title: Emerging Technology Governance: Opportunities and Challenges of AI in Medicine

Abstract: From genetic engineering to direct-to-consumer neurotechnology to ChatGPT, it is a standard refrain that science outpaces the development of ethical norms and governance. Further, technologies increasingly cross boundaries from medicine to the consumer market to law enforcement and beyond, in ways that our existing governance structures are not equipped to address. Finally, our standard governance approaches to addressing ethical issues related to new technologies fail to address population and societal-level impacts. This talk will demonstrate the above through a series of examples and describe ongoing work by the US National Academies and others to address these challenges.

Bio: Debra JH Mathews, PhD, MA, is the Associate Director for Research and Programs for the Johns Hopkins Berman Institute of Bioethics, and a Professor in the Department of Genetic Medicine, Johns Hopkins University School of Medicine. Within the JHU Institute for Assured Autonomy, she serves as the Ethics & Governance Lead. Dr. Mathews’s academic work focuses on ethics and governance issues raised by emerging technologies, with particular focus on genetics, stem cell science, neuroscience, synthetic biology, and artificial intelligence. In addition to her academic work, Dr. Mathews has spent time at the Genetics and Public Policy Center, the US Department of Health and Human Services, the Presidential Commission for the Study of Bioethical Issues, and the National Academy of Medicine working in various capacities on science policy.

Dr. Mathews earned her PhD in genetics from Case Western Reserve University, along with a concurrent Master’s in bioethics. She completed a Post-Doctoral Fellowship in genetics at Johns Hopkins, and the Greenwall Fellowship in Bioethics and Health Policy at Johns Hopkins and Georgetown Universities.

-

Speaker: Alberto Accomazzi, Harvard-Smithsonian Center for Astrophysics

Location: Hackerman B17

Title: The NASA Astrophysics Data System: A Case Study in the Integration of AI and Digital Libraries

Abstract: The NASA Astrophysics Data System (ADS) is the primary Digital Library portal for researchers in astronomy and astrophysics. Over the past 30 years, the ADS has evolved from an astronomy-focused bibliographic database to an open digital library system supporting research in space and, soon, earth sciences. I will begin by discussing why, in an era in which information is widely accessible, discovery platforms such as the ADS are still needed, and what function they fulfill in the research ecosystem. I will describe the evolution of the ADS system, its capabilities, and the infrastructure underpinning it, through multiple technology transitions and its role within an ecosystem of scholarly archives and publication services. I will then discuss the incorporation of Machine Learning (ML) and Natural Language Processing (NLP) algorithms into the operations of the system for information extraction, data normalization, and classification tasks. Next, I will highlight how emerging AI techniques will be adopted to implement metadata enrichment, search functionality, notifications, and recommendations. Finally, I will conclude by showing how the ADS system, and its successor SciX, can be used as an open platform for a variety of data science studies and applications.

Bio: After receiving a Doctorate in Physics from the University of Milan in 1988, Alberto Accomazzi led a number of image processing and analysis projects at the Center for Astrophysics, applying computer vision techniques to astronomical image classification problems. In 1994 he joined the NASA Astrophysics Data System (ADS) project as a developer and focused on the implementation of the ADS Search Engine and Article archive. In 2007 he became program manager of the ADS and in 2015 took the additional role of Principal Investigator. He currently serves on the scientific advisory board of the high-energy INSPIRE information system, and is the incoming president of IAU Commission B2 (Data & Documentation). He referees for various scholarly publications and is an editor of the journal Astronomy & Computing.

-

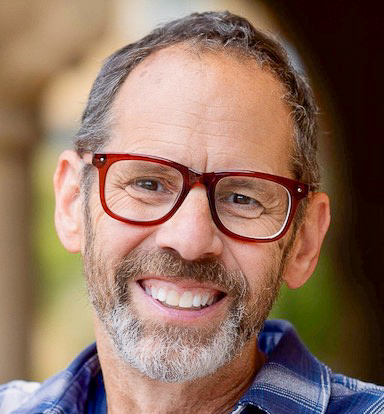

Speaker: Dan Jurafsky, Stanford University

Location: **Gilman Hall 50**

Title: Applying NLP to Social Questions: Police-Community Relations and the Politics of Immigration

Abstract: How can we apply speech and language models to important social questions? I first describe a series of studies in collaboration with Prof. Jennifer Eberhardt and her lab and Prof. Anjalie Field here at JHU that combine linguistics, social psychology, NLP, and speech processing to analyze traffic stop interactions from police body-worn camera footage. I’ll describe the differences we find in police language directed toward black versus white community members, demonstrate the relationship with escalation, and introduce a training intervention that can improve the relations between police officers and the communities they serve. In a similarly multidisciplinary study in collaboration with economists, we examine the language used by US politicians to describe immigrants over 140 years of US history. We trace the time-course of polarization on immigration, offer novel LM-based tools for measuring dehumanization metaphors, and show a homology between the language used to describe Chinese immigrants in the 19th century and Mexican immigrants in the 21st. I hope to inspire us to think more about applying speech and language models to help us interpret the latent social content behind the words and speech we use.

Bio: Dan Jurafsky is Professor of Linguistics, Professor of Computer Science, and Jackson Eli Reynolds Professor in Humanities at Stanford University. He is the recipient of a 2002 MacArthur Fellowship, a member of the American Academy of Arts and Sciences, and a fellow of the Association for Computational Linguistics, the Linguistic Society of America, and the American Association for the Advancement of Science. Dan is the co-author with Jim Martin of the widely used textbook “Speech and Language Processing”, and co-created with Chris Manning the first massively open online course in Natural Language Processing. His trade book “The Language of Food: A Linguist Reads the Menu” was an international bestseller and a finalist for the 2015 James Beard Award. Dan received a B.A. in Linguistics in 1983 and a Ph.D. in Computer Science in 1992 from the University of California at Berkeley, was a postdoc from 1992 to 1995 at the International Computer Science Institute, and was on the faculty of the University of Colorado, Boulder until moving to Stanford in 2003. He and his students study topics in all areas of NLP, including its social implications and its applications to the cognitive and social sciences.